The LLM Interaction node uses a large language model (LLM) to process prompts, generate responses, and perform complex tasks. You define a prompt, configure how the model behaves, and retrieve the output for use in subsequent steps of your tool. Its settings let you tune the interaction to your specific requirements.

Overview

The LLM Interaction node communicates with a large language model by sending it a prompt and receiving a response. It suits tasks such as text generation, summarization, and question answering. The node has two main sections:

- Prompt: where you define the input for the LLM.
- Settings: where you configure the LLM's behavior, such as creativity, temperature, Top P, and PII anonymization.

Key Components

Prompt

The Prompt section is where you define the input for the LLM. This is the core of the interaction, as it determines what the LLM will process and respond to.

- Prompt Textbox: Enter the instructions or query for the LLM. Be as specific as possible to get the output you want. For example:

  Write a professional email to a client apologizing for a delayed delivery. Include an offer for a discount on their next purchase.

- Prompt Tips: The interface shows tips to help you write effective prompts. Describe:
  - What you want to accomplish.
  - Any particular requirements or constraints.
  - Your preferred style or format.

- JSON Mode: Toggle the JSON Mode switch to enable structured output. This is useful for tasks that require a specific JSON schema or structured data.
  - Important: If JSON Mode is enabled, also describe the expected JSON format in the prompt, for example as part of the instructions:

    Generate a JSON object with the following structure: { "name": "string", "age": "integer", "email": "string" }

    This helps the LLM understand the expected output format and return a valid JSON response.
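
The Prompt Tips can be sketched as a small helper that assembles prompt text from the three suggested parts and, when JSON Mode is on, appends the expected JSON structure as the Important note recommends. This is a minimal illustration under our own assumptions: `build_prompt` and its parameters are hypothetical names, not part of the node; in the node itself you simply enter the resulting text in the Prompt textbox.

```python
import json


def build_prompt(goal, constraints=(), style=None, json_structure=None):
    """Assemble prompt text from the parts suggested by the Prompt Tips.

    Hypothetical helper for illustration only; the node just takes the
    finished text in its Prompt textbox.
    """
    # 1. What you want to accomplish.
    lines = [goal.strip()]

    # 2. Any particular requirements or constraints, one per line.
    if constraints:
        lines.append("Requirements:")
        lines.extend(f"- {c}" for c in constraints)

    # 3. Your preferred style or format.
    if style:
        lines.append(f"Style: {style}")

    # With JSON Mode enabled, spell out the expected structure in the prompt too.
    if json_structure is not None:
        lines.append(
            "Generate a JSON object with the following structure: "
            + json.dumps(json_structure)
        )

    return "\n".join(lines)


prompt = build_prompt(
    goal="Write a professional email to a client apologizing for a delayed delivery.",
    constraints=["Include an offer for a discount on their next purchase."],
    style="Polite and concise.",
)
print(prompt)
```

Passing `json_structure={"name": "string", "age": "integer", "email": "string"}` appends the same structure line used in the JSON Mode example, so the prompt and the toggle agree on the expected output.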

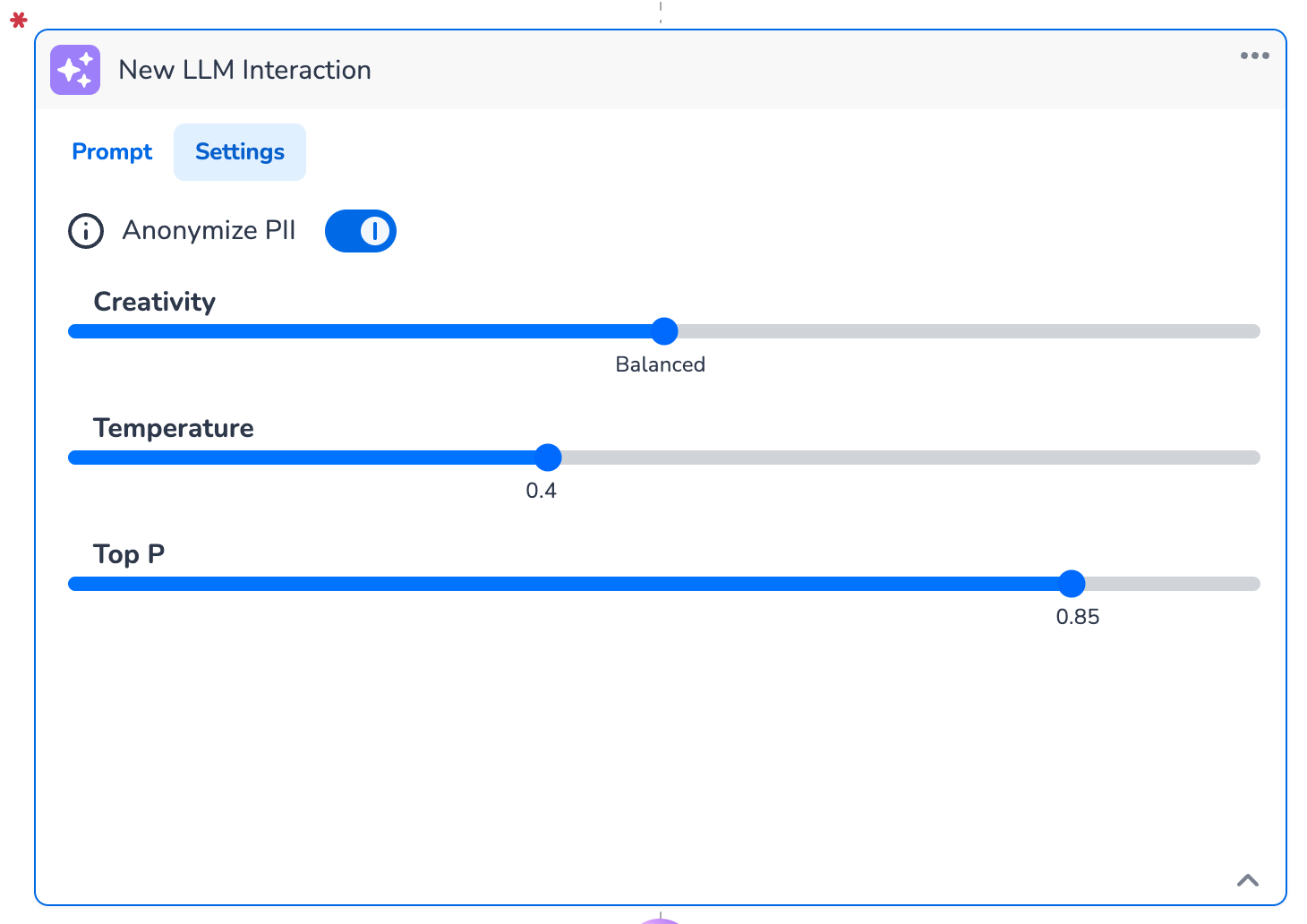
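
Even with JSON Mode enabled, it can be worth checking the response before a later step consumes it. The following is a minimal sketch, assuming the response arrives as a string and that the expected structure is the one from the JSON Mode example (`name` string, `age` integer, `email` string). `validate_response` and `EXPECTED_TYPES` are hypothetical names; a full JSON Schema validator would be the more thorough choice.

```python
import json

# Expected structure from the JSON Mode example; "integer" maps to Python int.
EXPECTED_TYPES = {"name": str, "age": int, "email": str}


def validate_response(raw):
    """Parse an LLM response and check it against EXPECTED_TYPES.

    Returns the parsed dict, or raises ValueError describing the problem.
    """
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"response is not valid JSON: {exc}") from exc

    if not isinstance(data, dict):
        raise ValueError("expected a JSON object at the top level")

    for key, expected in EXPECTED_TYPES.items():
        if key not in data:
            raise ValueError(f"missing field: {key}")
        value = data[key]
        # bool is a subclass of int in Python, so rule it out explicitly.
        if isinstance(value, bool) or not isinstance(value, expected):
            raise ValueError(f"field {key!r} should be {expected.__name__}")
    return data


record = validate_response('{"name": "Ada", "age": 36, "email": "ada@example.com"}')
print(record["age"])  # 36
```

Raising an error instead of silently passing malformed data along makes it easier to spot a prompt that does not describe the structure clearly enough.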

Settings

The Settings section allows you to configure the behavior of the LLM, giving you control over how the model generates responses.

Anonymize PII

Toggle the Anonymize PII switch to enable or disable anonymization of personally identifiable information (PII) in the LLM's response. When enabled, sensitive data such as email addresses and phone numbers is anonymized.

The following data types are anonymized:

- Phone number
- Email address
- IBAN code
- Credit card number
- Password
- VAT number
- Dutch BSN (citizen service number)
- Dutch passport number
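
To illustrate what masking some of these data types can involve, here is a minimal regex-based sketch. It is not the node's implementation, which is not documented here. The patterns are simplified assumptions covering only email addresses, IBANs (checked with the ISO 13616 mod-97 rule), credit card numbers (checked with the Luhn algorithm), and 9-digit Dutch BSNs (checked with the "11-test"). Phone, password, VAT, and passport detection are left out, and the `[TYPE]` placeholder format is hypothetical.

```python
import re

# Simplified patterns (assumptions for illustration, not production-grade).
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
IBAN_RE = re.compile(r"\b[A-Z]{2}\d{2}(?: ?[A-Z0-9]{4}){2,7}(?: ?[A-Z0-9]{1,3})?\b")
CARD_RE = re.compile(r"\b(?:\d[ -]?){12,18}\d\b")
BSN_RE = re.compile(r"\b\d{9}\b")


def iban_ok(candidate):
    """Mod-97 check: move the first four characters to the end,
    turn letters into numbers (A=10 ... Z=35), and expect remainder 1."""
    s = candidate.replace(" ", "")
    rearranged = s[4:] + s[:4]
    return int("".join(str(int(ch, 36)) for ch in rearranged)) % 97 == 1


def luhn_ok(candidate):
    """Luhn checksum used by payment card numbers."""
    digits = [int(d) for d in candidate if d.isdigit()]
    total = 0
    # Double every second digit, counting from the right.
    for i, d in enumerate(reversed(digits)):
        if i % 2 == 1:
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def bsn_ok(candidate):
    """Dutch BSN 11-test: weights 9..2, and -1 for the last digit."""
    weights = [9, 8, 7, 6, 5, 4, 3, 2, -1]
    return sum(w * int(d) for w, d in zip(weights, candidate)) % 11 == 0


def anonymize(text):
    """Replace recognized PII with [TYPE] placeholders (simplified sketch)."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    # Only mask candidates that pass their checksum, to limit false positives.
    text = IBAN_RE.sub(lambda m: "[IBAN]" if iban_ok(m.group()) else m.group(), text)
    text = CARD_RE.sub(lambda m: "[CARD]" if luhn_ok(m.group()) else m.group(), text)
    text = BSN_RE.sub(lambda m: "[BSN]" if bsn_ok(m.group()) else m.group(), text)
    return text


print(anonymize("Mail ada@example.com, IBAN NL91 ABNA 0417 1643 00, BSN 111222333."))
```

Checksums cut down on false positives, but a random digit run still passes the Luhn check about one time in ten, so pattern-based masking like this is a best-effort filter rather than a guarantee.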

Creativity

Adjust the Creativity slider to control the balance between deterministic and creative responses:

- Low Creativity: The LLM generates more predictable and focused responses.
- High Creativity: The LLM generates more imaginative and diverse responses.

Temperature

The Temperature slider controls the randomness of the LLM's output:

- Lower values (e.g., 0.2): The model produces more focused and predictable responses.
- Higher values (e.g., 0.8): The model produces more varied and creative responses.
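
Temperature is commonly implemented by dividing the model's raw token scores (logits) by the temperature before applying softmax. The sketch below shows that general technique on made-up logits for three candidate tokens. How the node applies temperature internally is not documented here, so treat this as an illustration of the concept rather than of this product.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Turn raw token scores into probabilities, scaled by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    # Subtract the max before exponentiating for numerical stability.
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.5]  # made-up scores for three candidate tokens
for t in (0.2, 0.8):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```

At 0.2 almost all of the probability lands on the top-scoring token, so sampling nearly always picks it. At 0.8 the other tokens keep a meaningful share, which is where the extra variety comes from.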

Top P

The Top P slider controls nucleus sampling, which restricts the model's choice of each next token to the smallest set of most probable tokens whose combined probability reaches the Top P value:

- Lower values (e.g., 0.5): The model considers fewer candidate tokens, resulting in more focused responses.
- Higher values (e.g., 0.9): The model considers a broader range of candidate tokens, resulting in more diverse responses.
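
Nucleus sampling can be sketched in a few lines. The example below shows the general technique on a made-up next-token distribution: rank tokens by probability, keep the smallest prefix whose cumulative probability reaches P, renormalize, and sample from what remains. The function names and probabilities are hypothetical, and how the node passes Top P to its underlying model is not documented here.

```python
import random


def top_p_filter(probs, p):
    """Keep the smallest set of highest-probability tokens whose
    cumulative probability reaches p, then renormalize."""
    if not 0 < p <= 1:
        raise ValueError("p must be in (0, 1]")
    ranked = sorted(probs.items(), key=lambda kv: kv[1], reverse=True)
    kept, cumulative = [], 0.0
    for token, prob in ranked:
        kept.append((token, prob))
        cumulative += prob
        if cumulative >= p:
            break
    total = sum(prob for _, prob in kept)
    return {token: prob / total for token, prob in kept}


def sample(probs, p, rng=random):
    """Draw one token from the nucleus."""
    nucleus = top_p_filter(probs, p)
    tokens = list(nucleus)
    return rng.choices(tokens, weights=[nucleus[t] for t in tokens], k=1)[0]


next_token_probs = {"the": 0.45, "a": 0.25, "this": 0.15, "every": 0.10, "zebra": 0.05}
print(top_p_filter(next_token_probs, 0.5))  # keeps "the" and "a"
print(top_p_filter(next_token_probs, 0.9))  # also keeps "this" and "every"
print(sample(next_token_probs, 0.5, random.Random(0)))
```

With P = 0.5 only two candidates survive, while with P = 0.9 the unlikely "zebra" is still excluded but four tokens remain in play, matching the lower and higher value descriptions above.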
